About

This site is an educational demo showing how to chat with documents using Retrieval-Augmented Generation (RAG).

1. Components

| Layer | Technology | Role |
|---|---|---|
| Frontend | Next.js + Tailwind CSS | React app on Vercel; renders the UI and calls the API endpoints |
| Backend | FastAPI (Docker) | Exposes the /ingest and /chat endpoints |
| Vector DB | PostgreSQL + PGVector (via Supabase) | Stores document embeddings for similarity search |
| Embeddings | Google Gemini text-embedding-004 | Converts text chunks into 768-dimensional vectors |
| LLM | Google Gemini Flash-Lite | Generates answers from the user query plus the retrieved context |

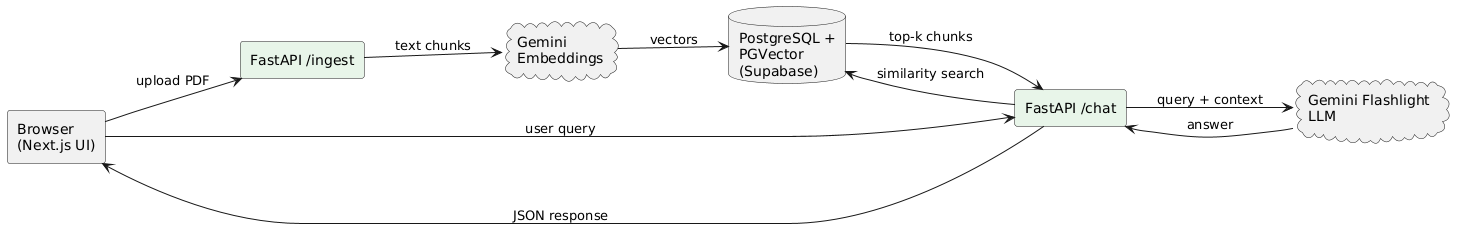
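To make the Vector DB row concrete: PGVector's `<=>` operator returns cosine distance, and a query shaped like `ORDER BY embedding <=> query_vector LIMIT k` ranks stored chunks by it (the column name here is hypothetical). The sketch below reproduces that ranking in plain Python. It assumes cosine distance is the metric this app uses, which the page doesn't state. `cosine_distance`, `top_k`, and the toy 2-D vectors are illustrative only, and real embeddings are far longer.

```python
import math


def cosine_distance(a: list[float], b: list[float]) -> float:
    """1 - cosine similarity: the value PGVector's <=> operator computes."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cosine distance is undefined for zero vectors")
    return 1.0 - dot / (norm_a * norm_b)


def top_k(query: list[float], rows: list[tuple[str, list[float]]], k: int = 3) -> list[str]:
    """Hypothetical helper: return the texts of the k rows nearest the query,
    mirroring ORDER BY embedding <=> query LIMIT k."""
    ranked = sorted(rows, key=lambda row: cosine_distance(query, row[1]))
    return [text for text, _ in ranked[:k]]


# Toy 2-D "embeddings" (hypothetical; real ones have hundreds of dimensions).
rows = [
    ("cats", [1.0, 0.0]),
    ("dogs", [0.9, 0.1]),
    ("tax law", [0.0, 1.0]),
]
print(top_k([1.0, 0.05], rows, k=2))  # ['cats', 'dogs']
```

In the deployed app this ranking happens inside Postgres, so only the k winning chunks travel back to FastAPI rather than every stored vector.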

2. Data Flow

- Ingestion (/ingest):
  - FastAPI receives a PDF upload
  - Splits it into text chunks → requests embeddings from Gemini
  - Stores the resulting vectors in Supabase (Postgres + PGVector)
- Chat (/chat):
  - FastAPI receives the user query
  - Embeds the query with the same Gemini embedding model
  - Runs a similarity search in PGVector → retrieves the top-k chunks
  - Formats those chunks into prompt context
  - Sends query + context to Gemini Flash-Lite → gets an answer
  - Returns a JSON response to the Next.js UI
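The page doesn't say how chunks are cut or how the prompt is worded. Here is a minimal sketch of those two steps, assuming fixed-size character chunks with overlap and a simple numbered-context prompt. `split_into_chunks`, `build_prompt`, the default sizes, and the prompt text are all hypothetical, and the Gemini and Supabase calls are left out so the example runs on its own.

```python
def split_into_chunks(text: str, chunk_size: int = 1000, overlap: int = 200) -> list[str]:
    """Hypothetical chunker: fixed-size character windows where each window
    repeats the last `overlap` characters of the previous one, so text near a
    boundary appears in two neighboring chunks. Sizes are assumptions."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be in [0, chunk_size)")
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # this window already reached the end of the text
    return chunks


def build_prompt(query: str, contexts: list[str]) -> str:
    """Hypothetical prompt template: numbered context blocks, then the question."""
    context_block = "\n\n".join(f"[{i}] {chunk}" for i, chunk in enumerate(contexts, start=1))
    return (
        "Answer the question using only the context below. "
        "If the answer is not in the context, say you don't know.\n\n"
        f"Context:\n{context_block}\n\n"
        f"Question: {query}\nAnswer:"
    )


print(split_into_chunks("abcdefghij", chunk_size=4, overlap=1))  # ['abcd', 'defg', 'ghij']
print(build_prompt("What is RAG?", ["RAG pairs retrieval with generation."]))
```

Overlap costs some extra storage and embedding calls, in exchange for fewer answers that get split across two chunks and missed by retrieval.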

3. Deployment

- Frontend on Vercel (auto-deploy from GitHub)

- Backend on Render Web Service (Docker)

- Database managed by Supabase (Postgres + PGVector)

- Models via Google Cloud’s Gemini APIs (free tier for demos)

4. Links

Educational prototype only.